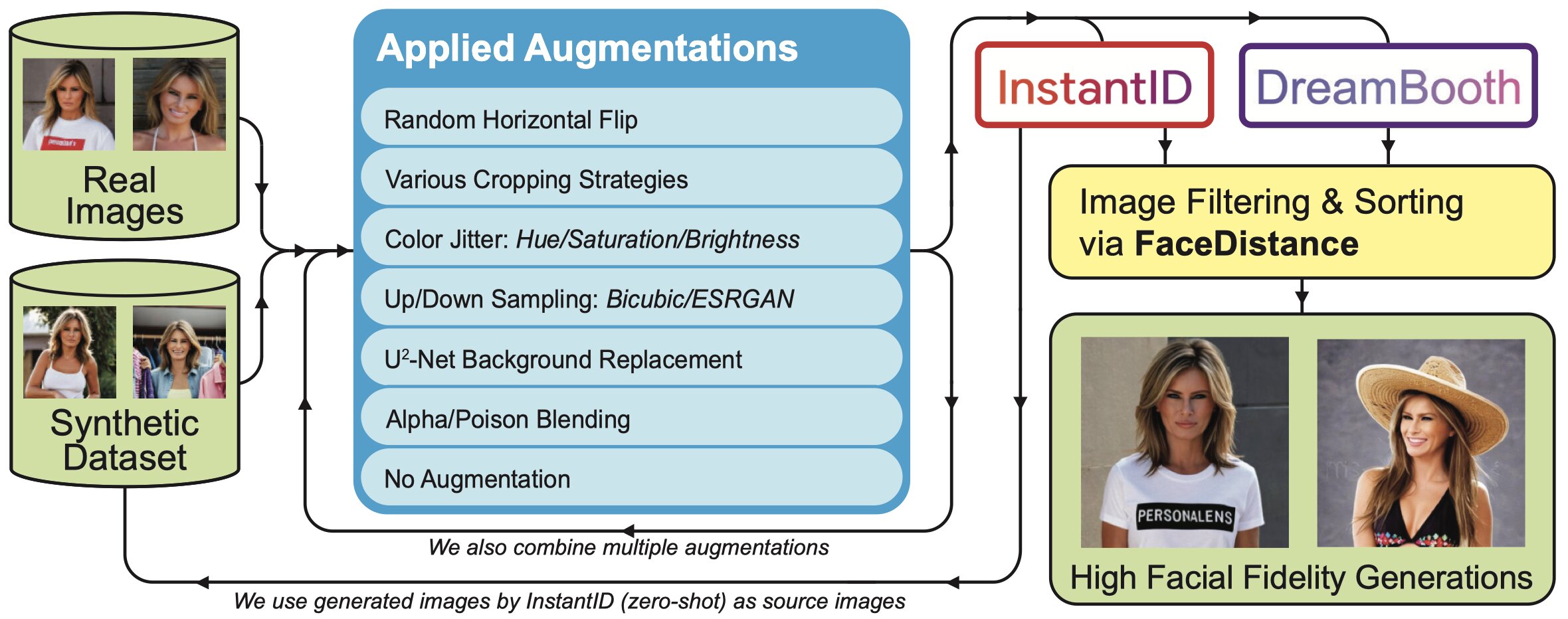

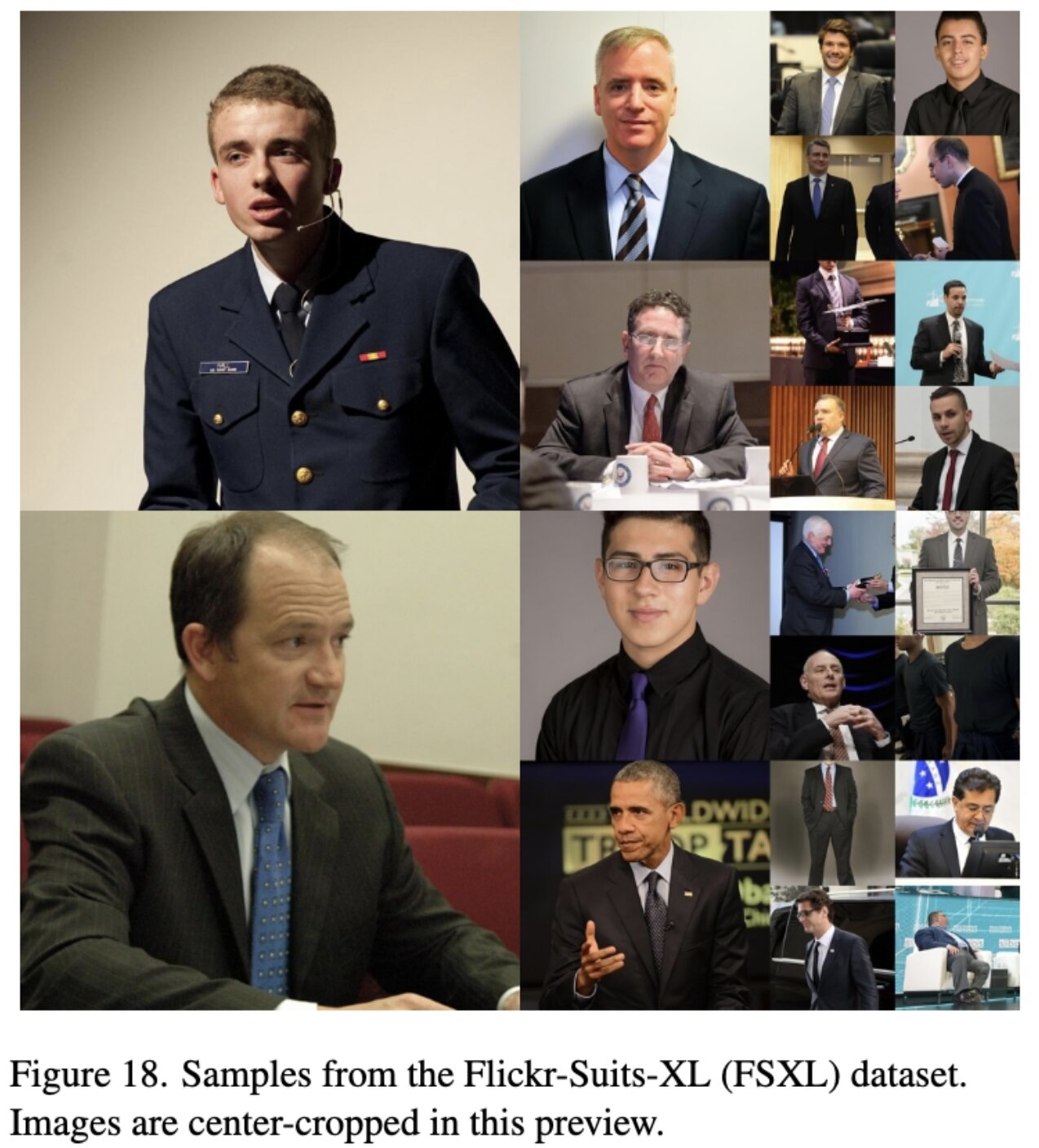

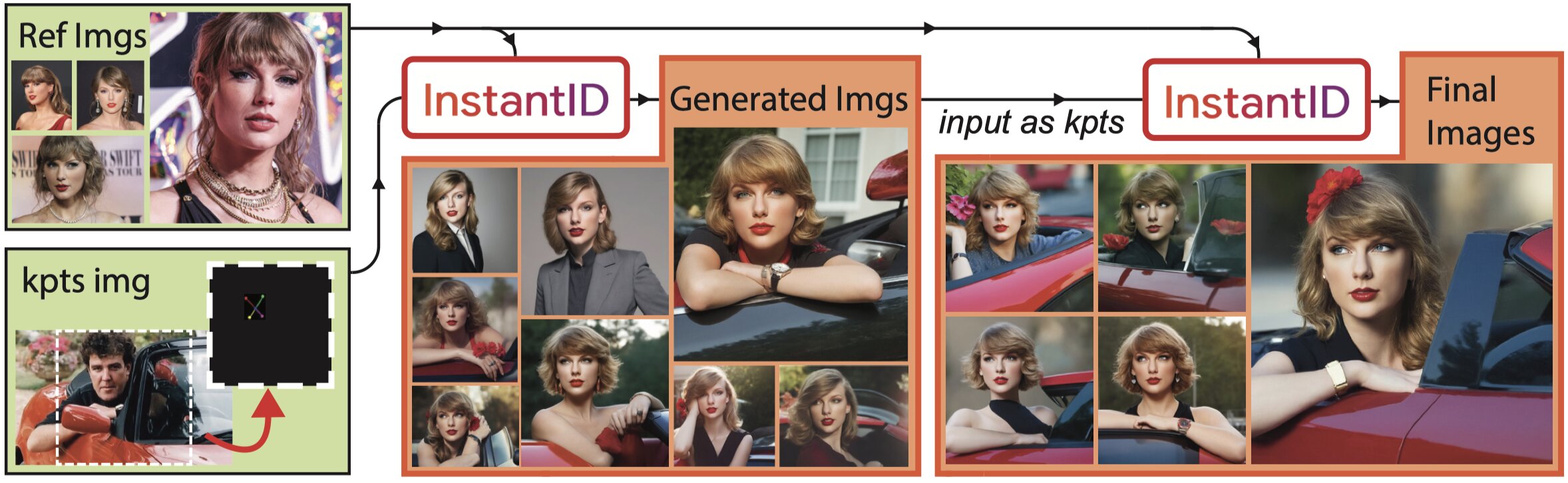

We analyze two distinct InstantID pipeline approaches for generating personalized portraits, each offering different trade-offs between facial similarity and compositional control.

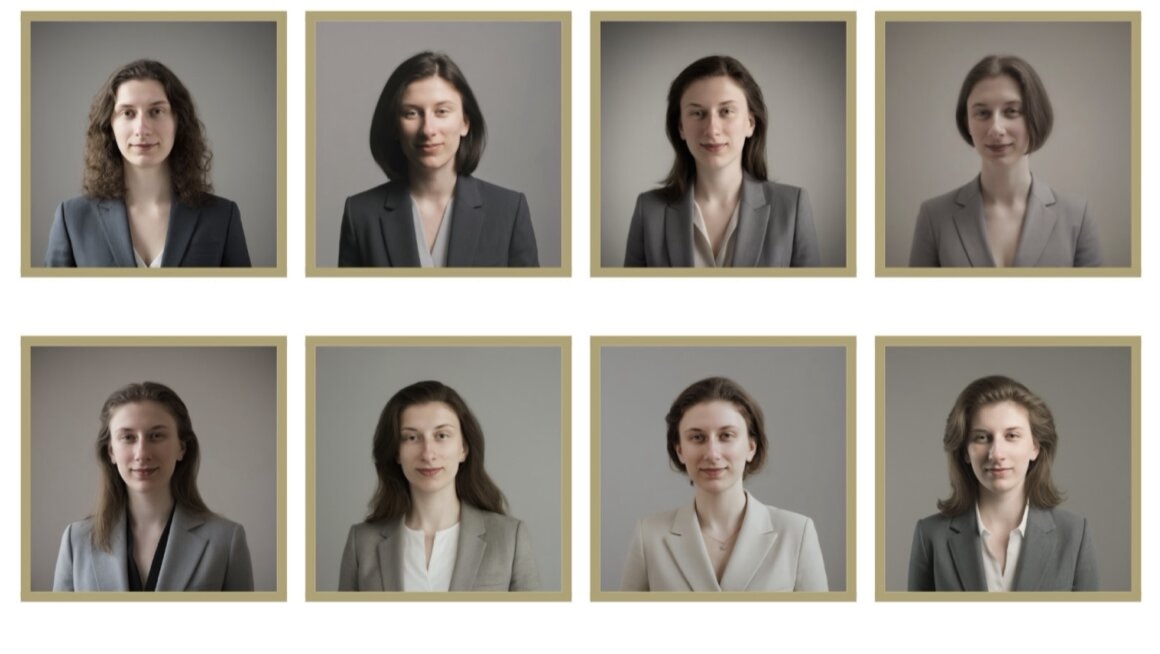

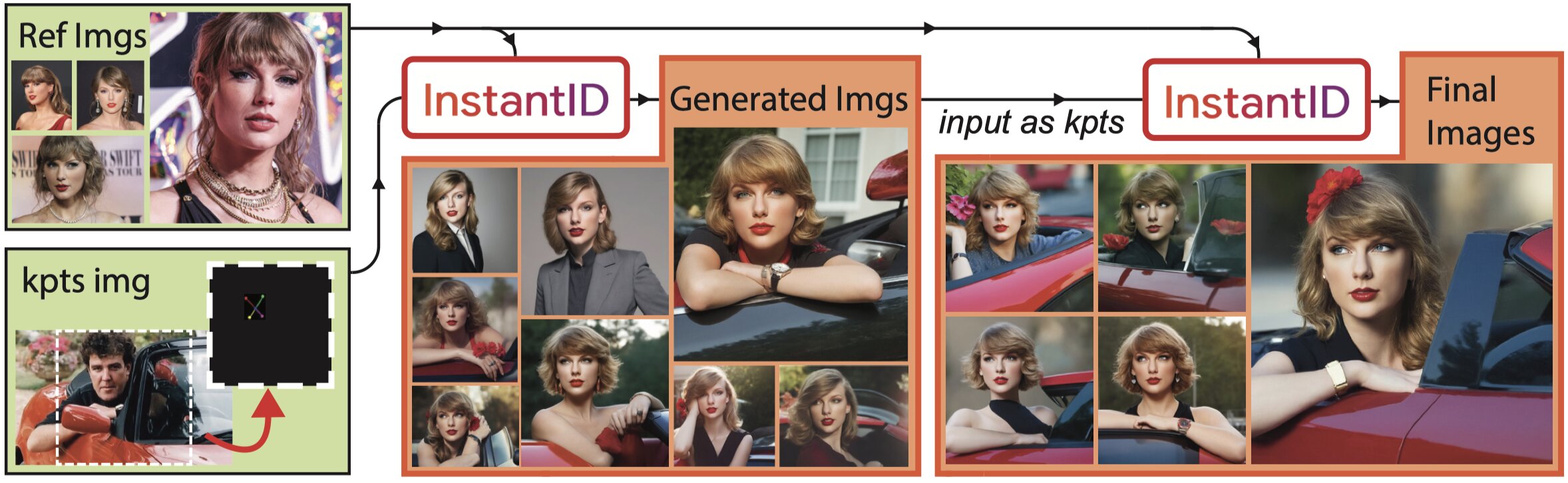

2-Step Generation

We collect subject reference images ($s_1, \dots, s_n$) and a separate image representing the desired pose and composition, $s_{kpts}$. These serve as the reference images and the keypoints image, respectively. While the resulting output is generally satisfactory, using facial landmarks from one person to generate another reduces facial similarity because the five keypoints (eyes, nose, mouth) differ structurally between people. We hypothesize that this stems from imbalanced conditioning weights. Facial similarity improves when $s_{kpts}$ is replaced with a previously generated image of the subject, while compositional control is maintained.
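The two passes described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: `generate` is a hypothetical callable standing in for one InstantID inference call that takes the reference images and a keypoints image, and `two_step_generate` is a name introduced here for illustration only.

```python
from typing import Callable, Sequence, Tuple, TypeVar

Image = TypeVar("Image")


def two_step_generate(
    generate: Callable[[Sequence[Image], Image], Image],
    references: Sequence[Image],
    pose_image: Image,
) -> Tuple[Image, Image]:
    """Run a generator twice, swapping the keypoints source on the second pass.

    `generate(references, keypoints_image)` is a hypothetical wrapper around a
    single pipeline call; it is an assumption of this sketch.
    """
    if not references:
        raise ValueError("at least one subject reference image is required")

    # Pass 1: the keypoints come from another person's image (s_kpts),
    # which fixes pose and composition but can reduce facial similarity.
    draft = generate(references, pose_image)

    # Pass 2: the draft already shows the subject in the desired pose,
    # so it is used as the keypoints image in place of s_kpts.
    final = generate(references, draft)
    return draft, final
```

Passing the generator in as an argument keeps the sketch independent of any particular pipeline API; only the order of the two calls and the swap of the keypoints image are taken from the text.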

InstantID 2-step generation: trade-offs between keypoints conditioning and subject-based conditioning.

Two-Step Generation Pipeline. Initial outputs use a keypoints image ($s_{kpts}$) from another identity, which often reduces facial similarity. Replacing $s_{kpts}$ with a prior output of the subject improves identity preservation while retaining the pose. Using four reference images offers a good trade-off, as demonstrated in the appendix. Although the pipeline is easy to use in downstream applications, this limitation motivates our face replacement method for greater control.

Face Replacement

Users interact with a simple tool to manipulate (move, rotate, resize) their cropped face on a canvas matching the diffusion model's output dimensions. This approach eliminates the similarity issues caused by using another person's facial landmarks. However, the method performs poorly when none of the reference images shows the subject facing the camera (deviations of more than 30°). User satisfaction was higher with this approach than with 2-step generation, which we attribute to increased interactivity and faster generation times.
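The sketch below shows one way the move/rotate/resize manipulation could be mapped onto the subject's own facial keypoints. It is an assumption-laden illustration rather than the tool's actual code: the function name `place_keypoints`, the choice to rotate about the crop's top-left origin, and the bounds check are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def place_keypoints(
    keypoints: Sequence[Point],
    scale: float,
    angle_deg: float,
    offset: Point,
    canvas_size: Tuple[int, int],
) -> List[Point]:
    """Map keypoints from crop coordinates to canvas coordinates.

    Order of operations (an assumption of this sketch): resize, then rotate
    about the crop's top-left origin, then move by `offset`.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    width, height = canvas_size

    placed: List[Point] = []
    for x, y in keypoints:
        xs, ys = x * scale, y * scale          # resize
        xr = xs * cos_t - ys * sin_t           # rotate
        yr = xs * sin_t + ys * cos_t
        px, py = xr + offset[0], yr + offset[1]  # move
        # The canvas matches the model's output size, so keypoints that
        # fall outside it cannot condition the generation.
        if not (0 <= px < width and 0 <= py < height):
            raise ValueError(f"keypoint ({px:.1f}, {py:.1f}) is outside the canvas")
        placed.append((px, py))
    return placed
```

Because the transformed keypoints come from the subject's own face, the structural mismatch of borrowing another person's landmarks does not arise; the canvas size passed in would be whatever output dimensions the diffusion model uses.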