Personalizing Stable Diffusion to generate professional portraits from amateur photos makes it hard to preserve facial resemblance. This paper evaluates how augmentation strategies affect two personalization methods: DreamBooth and InstantID.

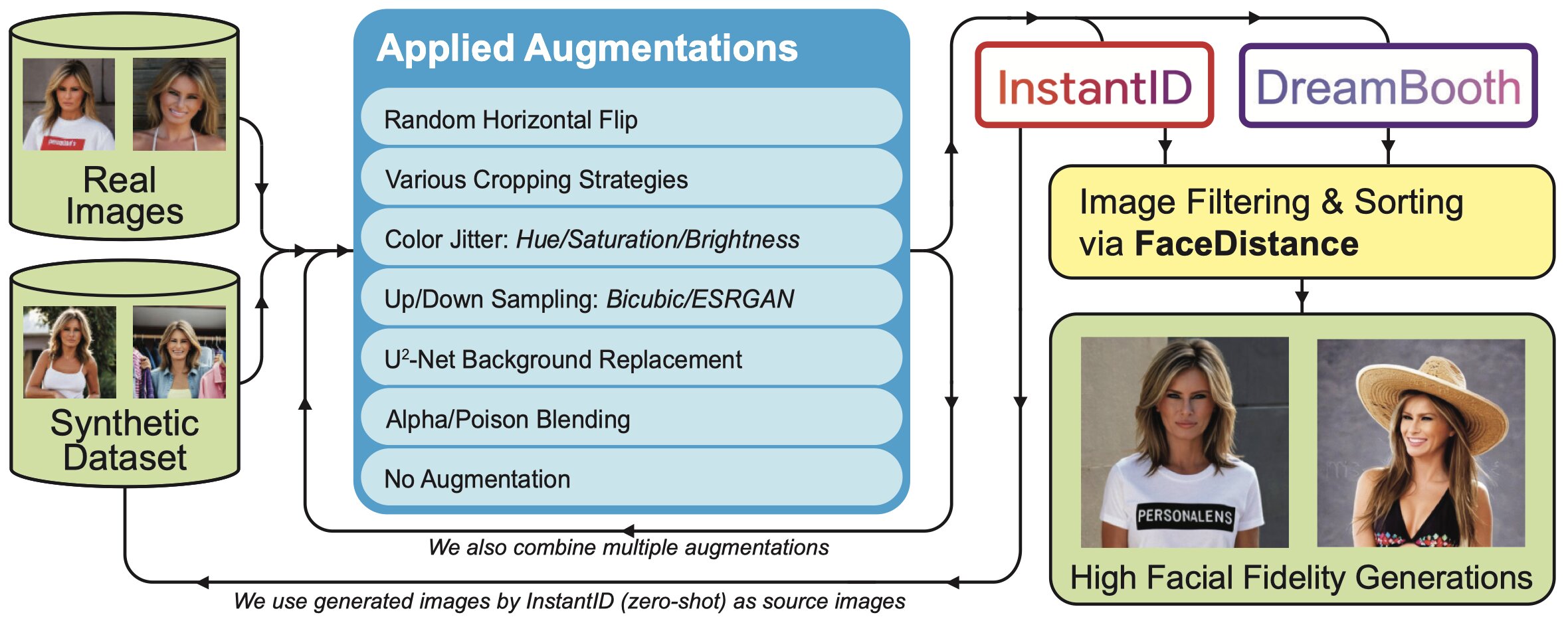

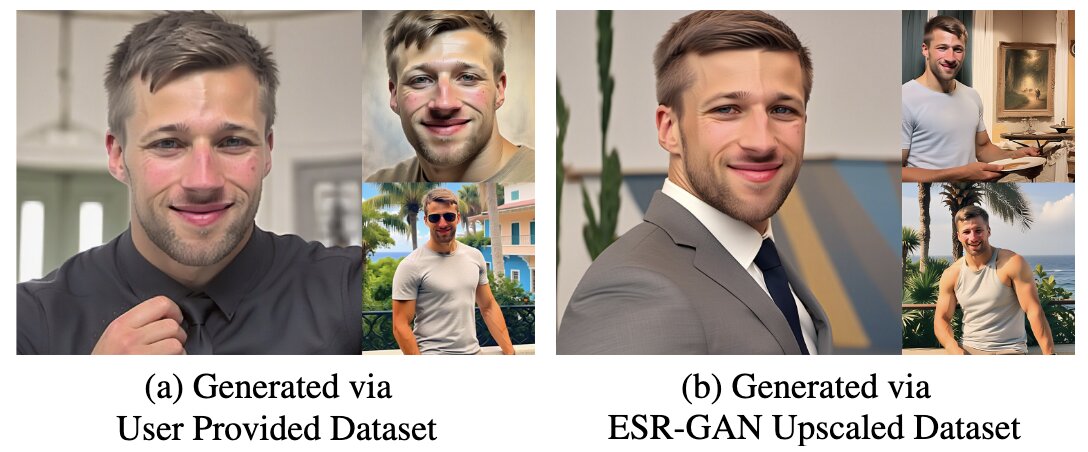

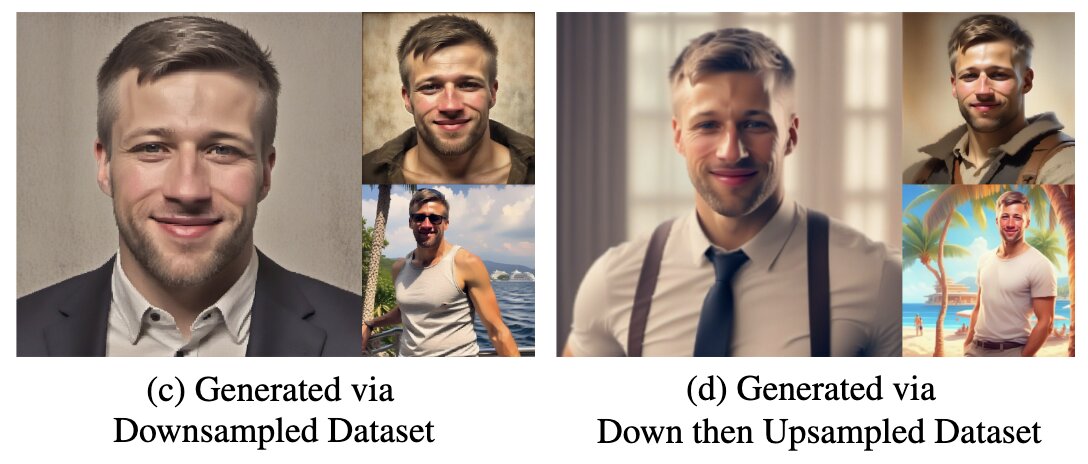

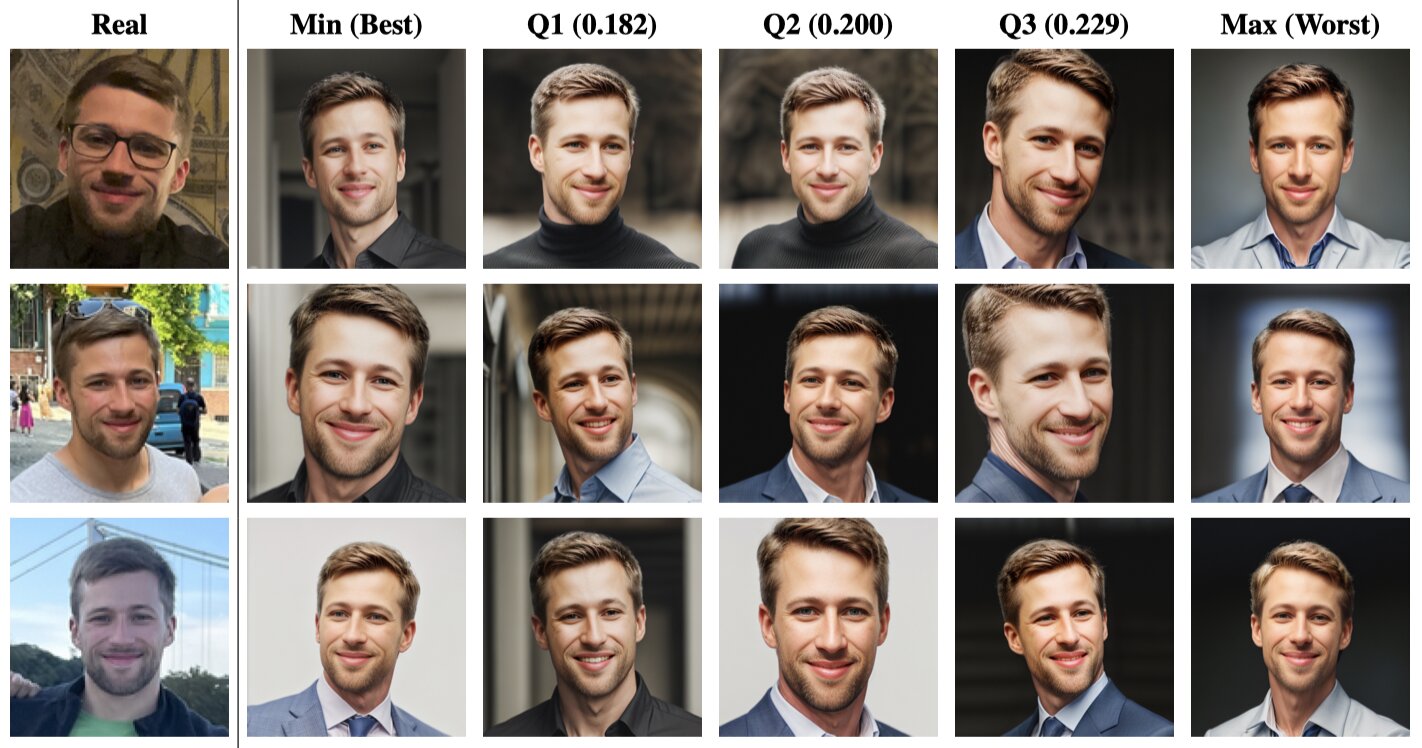

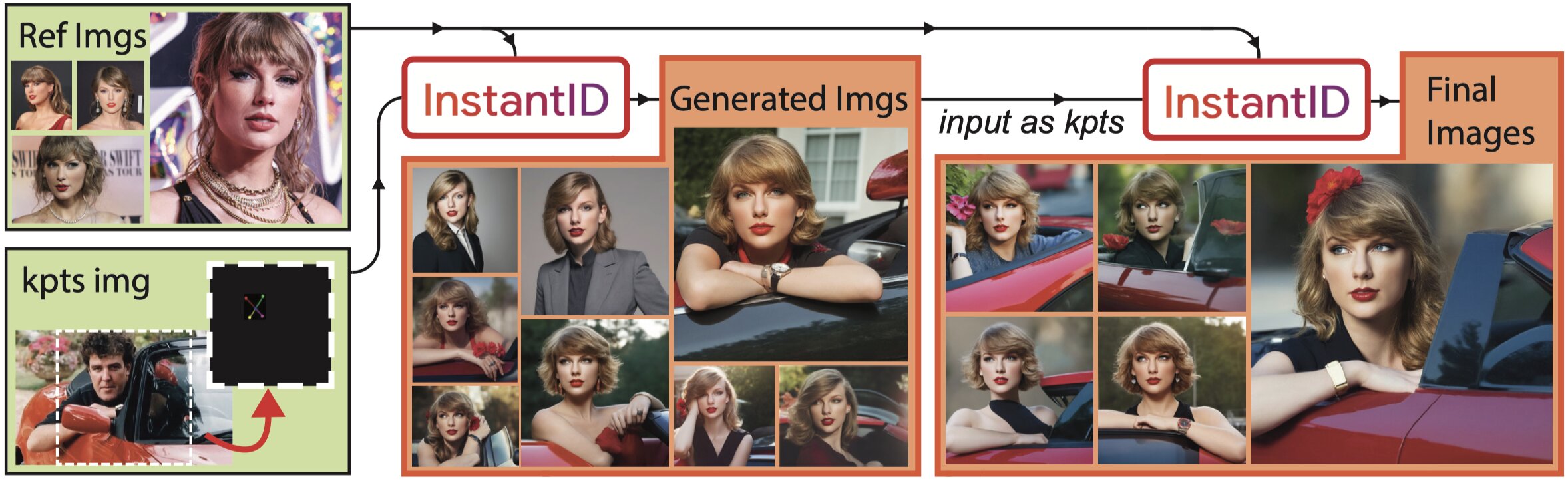

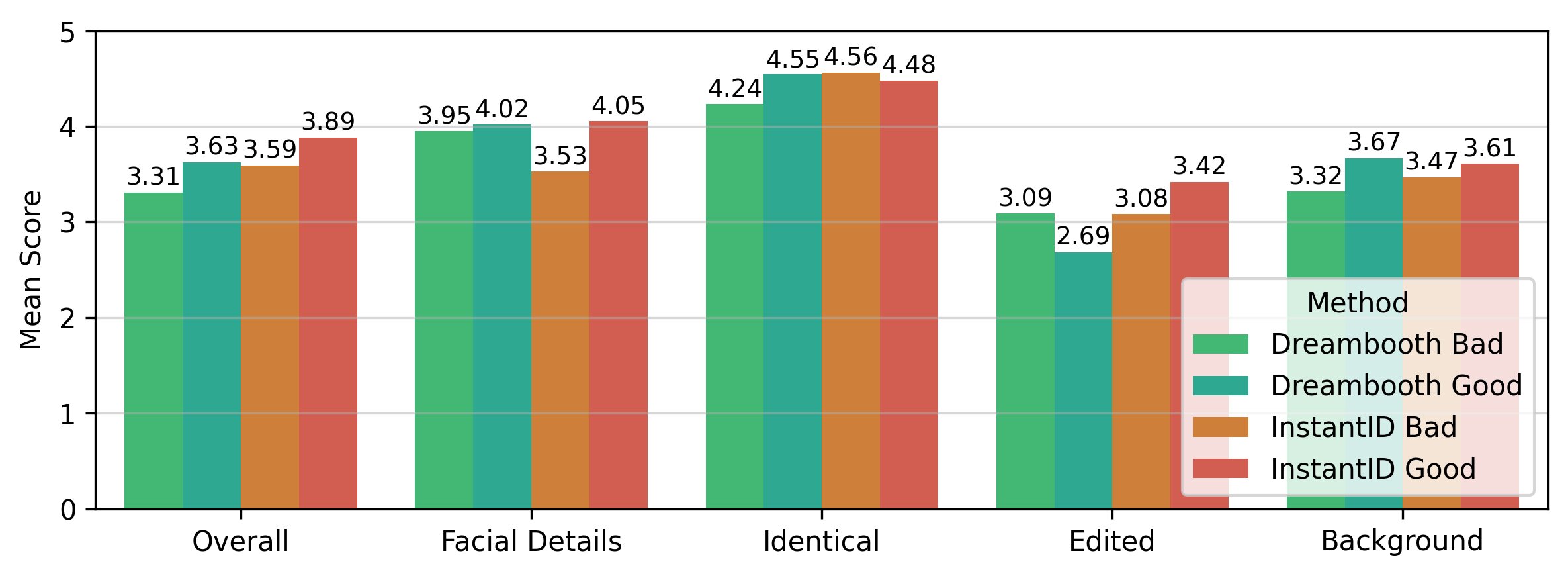

We compare classical augmentations (flipping, cropping, color adjustments) with generative augmentation, which enriches the training data with synthetic images produced by InstantID. Using SDXL and a new FaceDistance metric based on FaceNet, we quantitatively assess facial similarity.
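To make the idea of an embedding-based facial similarity score concrete, the following is a minimal sketch, not the paper's definition of FaceDistance. It assumes that face embeddings have already been extracted by a FaceNet-style model, and that the score is the mean Euclidean distance between L2-normalized embeddings of generated and reference images. The function name `face_distance` and both of these choices are illustrative assumptions.

```python
import numpy as np


def face_distance(ref_embeddings, gen_embeddings):
    """Mean pairwise Euclidean distance between L2-normalized embeddings.

    Hypothetical helper: the normalization and the averaging over all
    (generated, reference) pairs are assumptions, not the paper's formula.
    Lower values indicate closer facial resemblance.
    """
    ref = np.asarray(ref_embeddings, dtype=np.float64)
    gen = np.asarray(gen_embeddings, dtype=np.float64)

    # Normalize each embedding to unit length so only direction matters.
    ref = ref / np.linalg.norm(ref, axis=1, keepdims=True)
    gen = gen / np.linalg.norm(gen, axis=1, keepdims=True)

    # Pairwise differences with shape (n_gen, n_ref, dim).
    diffs = gen[:, None, :] - ref[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)
    return float(dists.mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Stand-ins for embeddings of real photos and generated portraits.
    real = rng.normal(size=(4, 512))
    generated = real + 0.1 * rng.normal(size=(4, 512))
    print(face_distance(real, generated))
```

With unit-length embeddings the score lies between 0 (identical direction) and 2 (opposite direction), which makes values comparable across subjects under these assumptions.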

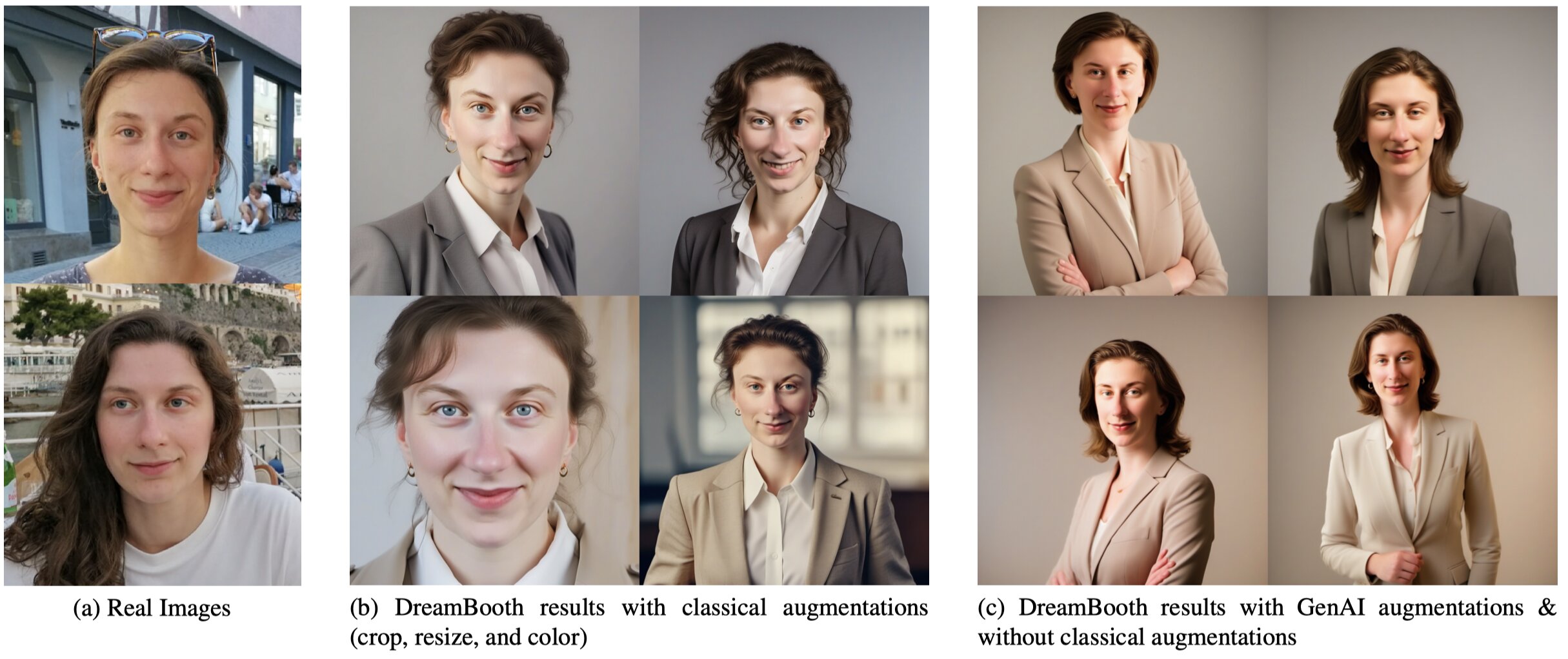

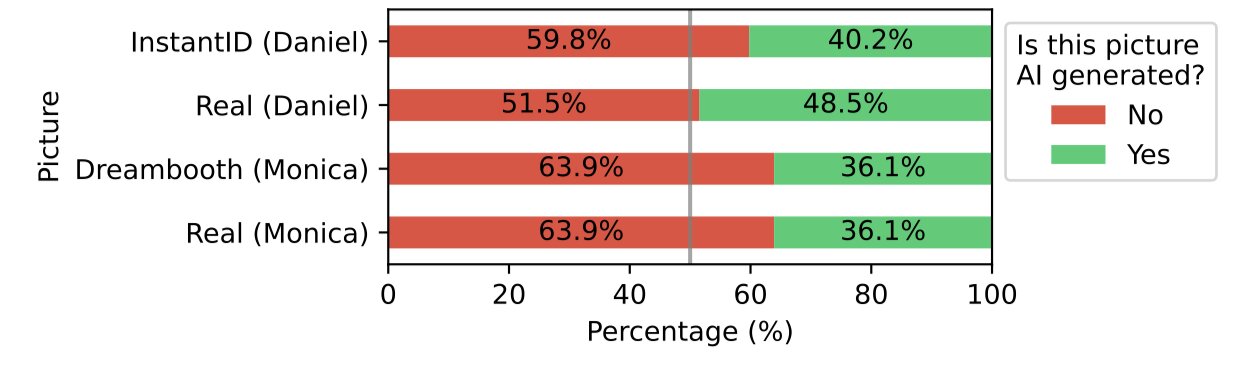

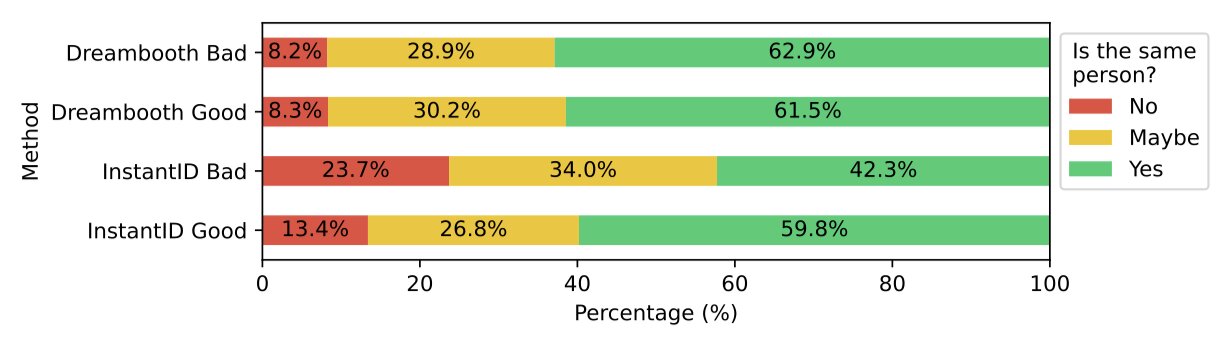

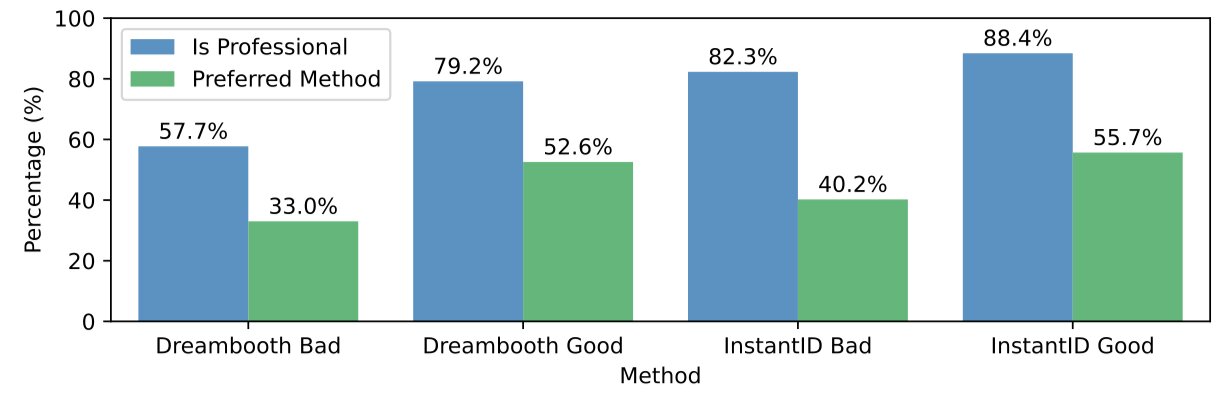

Results show that classical augmentations can introduce artifacts that harm identity retention, while InstantID-generated images improve fidelity when balanced with real images to avoid overfitting. A user study with 97 participants confirms high photorealism and shows that participants favor InstantID for its polished look and DreamBooth for its identity accuracy.

Our findings inform the choice of effective augmentation strategies for personalized text-to-image generation.